When Amazon Web Services (AWS) went dark in its US‑EAST‑1 data‑center cluster early Monday, the ripple effect was felt from a teenager in Delhi trying to finish a Duolingo lesson to a gamer in Stockholm getting booted from a Fortnite match.

The AWS outage began at 00:00 PT (03:00 ET) on October 20, 2025, and lingered for roughly three hours, according to the company’s Service Health Dashboard. By mid‑day most services were back online, but the backlog of queued requests forced many platforms to run at reduced speed well into the afternoon.

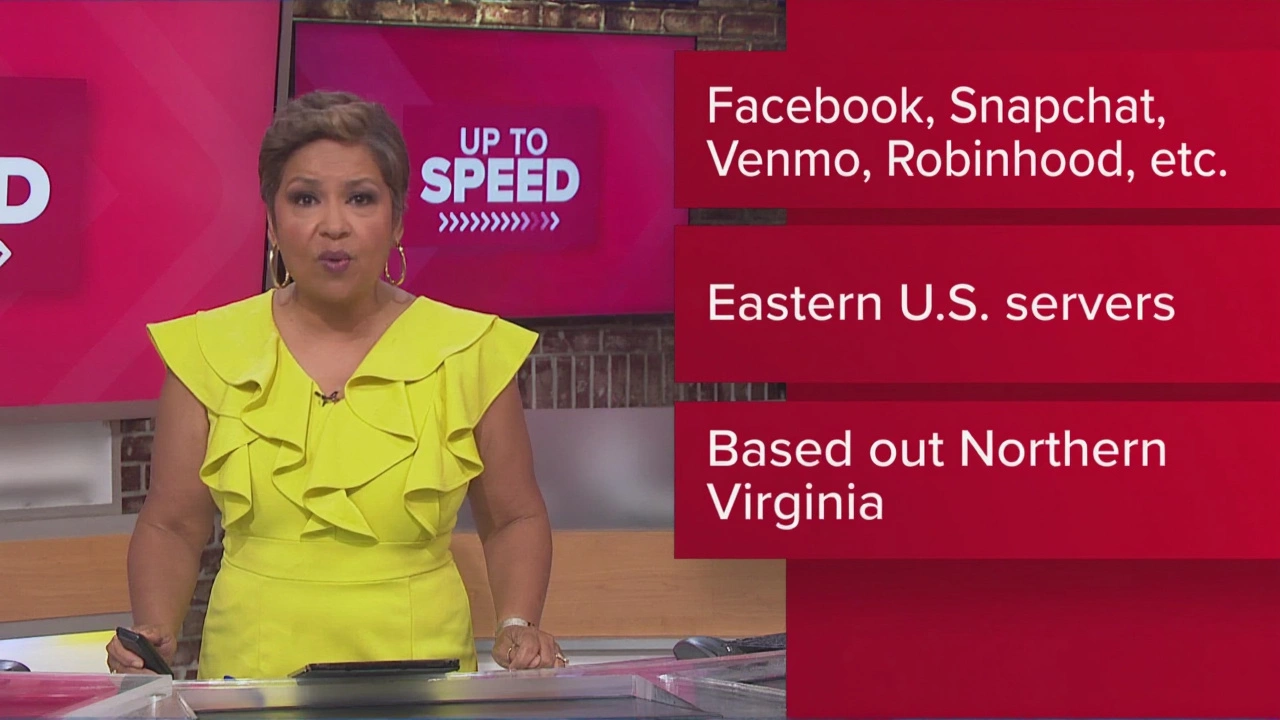

In a nutshell: a DNS resolution failure at the DynamoDB API endpoint, compounded by a regional gateway glitch in the Northern Virginia cluster, knocked out core services like EC2, SQS and DynamoDB. The outage cascaded across dozens of consumer‑facing apps – Snapchat, Roblox, Prime Video, Robinhood, Venmo, Reddit and even the streaming feed of local broadcaster WUSA9 went dark for a stretch. By 06:00 ET AWS announced “significant signs of recovery,” and by 12:00 ET most public‑facing services reported normal latency.

Technical Root Causes and Timeline

According to the post‑mortem released later that day, the first fault was a malfunctioning DNS resolver that could no longer translate the DynamoDB API hostname to an IP address within the US‑EAST‑1 region. Without that translation, Lambda functions that rely on DynamoDB for state‑keeping began to time out, which in turn jammed SQS queues. A second, independent issue emerged when a regional gateway – the hardware that routes traffic between the data‑center’s internal network and the public internet – suffered a firmware timeout, further throttling outbound requests.
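To make the first failure mode concrete, here is a minimal Python sketch of a hostname resolution probe. It is an illustration only, not AWS's tooling: the function name `resolve_host` and its injectable `resolver` parameter are assumptions made for this example, and the only real library call is the standard `socket.getaddrinfo`.

```python
import socket


def resolve_host(hostname, port=443, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IP addresses for `hostname`.

    Returns an empty list when resolution fails, which is the condition
    clients hit when a hostname cannot be translated to an IP address.
    `resolver` is injectable (hypothetical design choice) so the failure
    path can be exercised without touching the network.
    """
    try:
        infos = resolver(hostname, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # Name resolution failed: no address, so no connection is possible.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Regional DynamoDB endpoint name used for illustration.
    addresses = resolve_host("dynamodb.us-east-1.amazonaws.com")
    print(addresses if addresses else "resolution failed")
```

A caller that receives an empty list can fail fast or switch to a fallback path instead of waiting on a connection timeout, which is the behavior that reportedly backed up dependent services.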

Key timestamps:

- 00:00 PT (03:00 ET) – DNS failure detected.

- 01:15 PT – Gateway firmware issue surfaces.

- 03:30 PT – AWS begins parallel mitigation paths.

- 05:45 PT – Majority of EC2 instances restored.

- 06:10 PT – “Significant signs of recovery” communicated.

- 12:00 PT – Full mitigation declared.

While the engineering team rolled out fixes within hours, the sheer volume of pending requests meant a processing lag that persisted for the rest of the day.
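The lingering lag is a matter of queue arithmetic: a backlog only shrinks at the rate by which processing capacity exceeds new arrivals. The sketch below works through that relationship. Every number in it is hypothetical and chosen purely for illustration; none comes from AWS.

```python
def backlog_drain_seconds(backlog, arrival_rate, service_rate):
    """Estimate seconds needed to clear a queue backlog.

    backlog      -- messages already waiting
    arrival_rate -- new messages arriving per second
    service_rate -- messages processed per second
    Returns float("inf") when processing cannot outpace arrivals.
    """
    if backlog < 0 or arrival_rate < 0 or service_rate < 0:
        raise ValueError("inputs must be non-negative")
    if backlog == 0:
        return 0.0
    surplus = service_rate - arrival_rate  # net messages cleared per second
    if surplus <= 0:
        return float("inf")
    return backlog / surplus


if __name__ == "__main__":
    # Hypothetical figures: 36 million queued messages, 9,000 new
    # messages/s still arriving, 10,000 messages/s of capacity.
    seconds = backlog_drain_seconds(36_000_000, 9_000, 10_000)
    print(seconds / 3600)  # 10.0 hours
```

With only a 10 percent capacity surplus in this made-up scenario, a backlog built up over a few hours takes much longer than the outage itself to clear.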

Services and Platforms Caught in the Storm

Because AWS underpins a large share of the modern web, the outage hit services across many categories:

- Social media: Snapchat users reported being unable to send snaps; Instagram’s story API slowed down.

- Gaming: Fortnite matches dropped players mid‑battle; Roblox servers displayed “Service Unavailable.”

- Finance: Robinhood and Venmo showed error screens when users tried to view balances.

- Streaming: Prime Video buffered indefinitely for new episodes; YouTube’s recommendation engine faltered in several regions.

- Productivity: Slack channels froze; Canva’s design editor lagged.

- Smart home: Amazon Alexa failed to process voice commands for a handful of households.

Downdetector, the crowd‑sourced outage tracker, logged “tens of thousands of reports” across more than 30 distinct services, making this one of the broadest disruptions in its database.

Reactions from AWS and Media Observers

In a terse statement posted at 03:45 ET, AWS wrote, “Based on our investigation, the issue appears to be related to DNS resolution of the DynamoDB API endpoint in US‑EAST‑1. We are working on multiple parallel paths to accelerate recovery.” By 06:20 ET the same page added, “We confirm that we have now recovered processing of SQS queues via Lambda Event Source Mappings. We are now working through processing the backlog of SQS messages in Lambda queues.”

The Hindustan Times highlighted that “several thousand Indian users submitted outage reports” around 12:00 IST, noting that platforms like Snapchat, Canva and Slack were especially hard hit. Meanwhile, WUSA9 confirmed its own streaming service briefly went offline, though it was back up by the 06:00 ET update.

Industry analyst Jonathan Albarran wrote in a post‑mortem blog that the incident “exposes a fundamental fragility in modern digital infrastructure: when AWS goes down, much of the internet goes with it.” He compared the event to the 2020 AWS US‑WEST‑2 outage, noting that the current incident affected a wider, more consumer‑facing set of services.

Implications for Cloud Resilience

The outage reignited a debate that’s been simmering for years: how much should businesses rely on a single cloud provider? The US‑EAST‑1 region, described by ZeroHedge as “one of AWS’s largest data centers,” hosts a disproportionate share of the services that much of the internet depends on.

Experts argue that multi‑cloud or hybrid strategies can mitigate “single‑point‑of‑failure” risks, but the cost and operational complexity remain barriers for smaller firms. Additionally, the DNS layer – often taken for granted – emerged as a vulnerable choke point. Some providers are already exploring DNS‑over‑HTTPS (DoH) and anycast routing to diversify resolution paths.

Regulators in the EU and India have taken note, with the European Commission reportedly planning a review of cloud‑service concentration risks after the incident.

Looking Ahead: What Might Change?

Amazon has pledged to “enhance observability” around its DNS infrastructure and to run “more frequent chaos‑engineering drills” in the US‑EAST‑1 region. Whether those internal measures will translate into measurable resilience for end‑users remains to be seen.

For developers, the outage serves as a reminder to implement graceful degradation: circuit‑breaker patterns, exponential back‑off, and fallback tiers that can reroute traffic to alternative cloud zones or even on‑premises resources.
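As a rough illustration of how those three patterns fit together, the Python sketch below combines a small circuit breaker, exponential back‑off with jitter, and a fallback call. It is a minimal sketch under stated assumptions, not a production library: the names `CircuitBreaker`, `CircuitOpenError` and `call_with_fallback`, along with all thresholds and delays, are hypothetical choices for this example.

```python
import random
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, then rejects
    calls until `reset_timeout` seconds pass, after which one trial call
    is allowed (the half-open state)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def call_with_fallback(primary, fallback, breaker, max_attempts=3,
                       base=0.5, cap=30.0, sleep=time.sleep,
                       rng=random.random):
    """Try `primary` through the breaker with exponential back-off;
    use `fallback` once attempts run out or the circuit opens."""
    for attempt in range(max_attempts):
        try:
            return breaker.call(primary)
        except CircuitOpenError:
            break  # stop hammering a dependency that is known to be down
        except Exception:
            if attempt + 1 < max_attempts:
                # Delay doubles each attempt, is capped, and is scaled by a
                # random factor so that clients do not retry in lockstep.
                sleep(rng() * min(cap, base * 2 ** attempt))
    return fallback()
```

In practice `primary` might wrap a call to a regional service and `fallback` might read from a cache or a replica in another zone. The `sleep`, `rng` and `clock` parameters exist so the behavior can be tested without real delays.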

In the end, the October 20 debacle underscores a simple truth: the cloud is not a magic wand that makes outages impossible. It’s a powerful tool that, when misconfigured or hit by a technical glitch, can knock the lights out on a global stage.

Frequently Asked Questions

What caused the AWS outage in Northern Virginia?

A DNS resolver failure prevented the DynamoDB API endpoint from resolving, and a separate firmware timeout in a regional gateway, which surfaced at 01:15 PT, throttled outbound traffic. Together they stalled EC2, SQS and DynamoDB services, creating a cascade of errors across the US‑EAST‑1 region.

Which major apps were affected and how?

Snapchat users couldn’t send snaps, Fortnite booted players mid‑match, Robinhood and Venmo showed error screens, Prime Video stalled on new streams, and Slack channels froze. Even smart‑home devices were hit: Amazon Alexa failed to process voice commands in some households.

How long did the outage last and when was service fully restored?

The initial disruption began at 00:00 PT (03:00 ET) and lasted about three hours. AWS reported “significant signs of recovery” by 06:00 ET, and a full mitigation statement was issued around 12:00 PT, though some backlog processing continued into the afternoon.

What does this outage mean for cloud‑service reliability?

It highlights the concentration risk of relying heavily on a single provider and region. Businesses may look to diversify with multi‑cloud or hybrid setups, and providers are expected to invest in more robust DNS and failover mechanisms.

Will future AWS outages be easier to detect?

Amazon has said it will increase observability of its DNS infrastructure and run more frequent chaos‑engineering drills in the US‑EAST‑1 region. Those steps should improve early detection, but the sheer scale of the service means some incidents will still have widespread impact.

Elias Whitestone

Hello, I'm Elias Whitestone, an expert in the field of education with a passion for writing about poetry and learning experiences. I strive to inspire others through my own creative expression and innovative teaching methods. Having spent years honing my craft, I understand the impact that literature and education can have on individuals and society as a whole. My goal is to help others unlock their potential and foster a love for learning and artistic exploration.
